Support Vector Machines is a widely used supervised learning algorithm to learn linear and non-linear functions.

Motivation

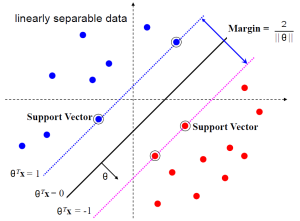

Classifying data is a common task in machine learning. Given a set of training data, each marked as belonging to one of two categories, and the goal is to decide which class a new data point will be in. In Support Vector Machines (SVM) training algorithm, a data point is viewed as a n-dimensional vector, and we want to know whether we can separate such points with a (n-1)-dimensional hyperplane, we call it a Linear Classifier. Ususally there are many hyperplanes that might classify the data. We can choose the best hyperplane as the one that represents the largest separation, or margin, between the two classes. That is, the distance from it to the nearest data point on each side is maximized. The SVM algorithm is to learn (find) such maximum-margin hyperplane or maximum-margin binary classifier.

Linear Classifiers and Margin

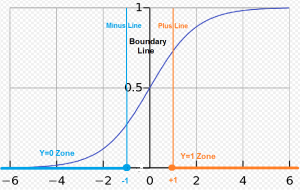

In Logistic Regression, we have the logistic regression hypothesis:

and make the following classfication based the estimated probability:

- When the probability of y =1 is greater than 0.5 then we can predict y = 1;

- Else we predict y = 0.

From the property of Sigmoid function, we can get the equivalent Linear Decision Boundary or Linear Classifier is:

Let us rewrite

Then the Linear Decision Boundary or Linear Classifier is

Now we can modify the Linear Classifier little bit by adding decision margins to get the SVM — We refer to SVM as a Large Margin Classifier:

- If

, we need

, not just

- If

, we need

, not just

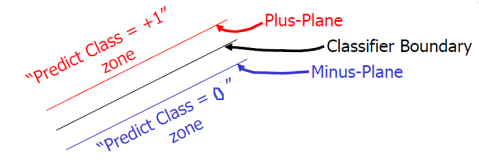

We can translate this Sigmoid function term into hyperplane language. If the training data are linearly separable, we can select two hyperplanes in a way that they separate the data and there are no points between them, and then try to maximize their distance. The region bounded by them is called “the margin”. These hyperplanes can be described by the equations

- Classifier Boundary:

- Plus-Plane:

- Minus-Plane:

Then the classification decision for a new coming example is:

- Positive Class (i.e.

): if

- Negative Class (i.e.

): if

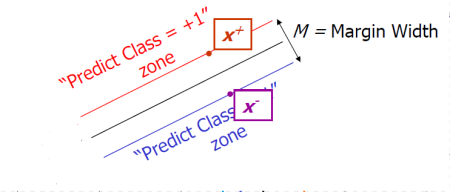

How to Calculate the Margin Width

The distance between the Plus-Plane and Minus-Plane is called the margin width. How to calculate the margin width M in terms of ?

From the Classifier Boundary equation we know the vector

is perpendicular to the Plus-Plane and Minus-Plane.

Let

be any point on the Minus-Plane

be be the closest Plus-plane-point to

.

They are not necessarily example data points. Obviously, the vector is perpendicular to the Plus-Plane and Minus-Plane, and the margin width

.

Therefore, we have the following equations:

- 1.

- 2.

- 3.

(for some value k).

- 4.

Now it is easy to calculate the margin width : Substracting the Eq. 2 from Eq. 1, we have

and then applying the Eq.3, we get

So we have

Finally from Eq.4 we have

The datapoints lying on either the Minus-Plan or the Plus-Plan are called Support Vectors.

SVM – Optimization

Therefore, learning the SVM can be formulated as an optimization program: Search the space to find the widest margin that matches all the training example datapoints. The constraints are

, if

, if

for over all the training dataset.

We can compact this constraint as

Equivalently, from the margin width formula above, we have the optimization problem

s.t. .

This is a quadratic optimization problem subject to linear constraints and there is a unique minimum.

QP is a well-studied class of optimization algorithms to maximize a quadratic function of some real-valued variables subject to linear constraints (linear equality and/or inequality constraints). There exist algorithms for finding such constrained quadratic optima much more efficiently and reliably than gradient ascent method.

![Image [5]](https://lukelushu.files.wordpress.com/2014/08/image-5.png?w=300&h=264)

![Image [13]](https://lukelushu.files.wordpress.com/2014/08/image-13.png?w=274&h=225)

![Image [14]](https://lukelushu.files.wordpress.com/2014/08/image-14.png?w=235&h=221)

![Image [12]](https://lukelushu.files.wordpress.com/2014/08/image-12.png?w=300&h=223)